The future is now, eh?

Whenever I see people frustrated by a slow phone or, God forbid, an internet interruption, I wonder: do they realize how advanced our civilization is? A smartphone, a device that everyone has in their pocket today, would have shocked people 30 years ago to the point of epileptic seizures. And now it is the norm for all. People do not look at modern technology in awe, but I think they should. There is happiness in it.

I try to explain to my daughters (4 and 6 years old, Canadian-born and spoiled) how things were when I was their age. Theirs is the childhood of middle-class kids in modern Vancouver; mine was 30 years ago, in a village in the Ukraine of the just-collapsed Soviet Union. And I don't think they believe me or can comprehend the colossal gap between my childhood and theirs.

My parents were engineers who graduated with perfect grades at the top of their class. For that achievement, they were sent to a village in the middle of nowhere to work at a sugar plant. That is where I spent the first ten years of my life. In the early 90s, the economic system went from "We (communist party) know exactly what everyone needs and the people (communist party) own everything" to "Oh shit, now what?" Our village had precisely one store, and that store had one type of bread twice a week and nothing else. My family did not own a car, so a rotary phone, a monochrome TV (I could not explain that to my kids), a radio, and a tape player were all the technology we had. Oh yeah, and the lightbulbs, can't forget those.

The first time I saw a computer was around 1995, but I first heard about one when I was about five (around 1991), and I remember imagining what a computer was and what it could do. My imagination exploded. I thought it could do everything. I thought, for instance, that I could ask it to do my homework, and it would. Of course, in my imagination, my interaction with the machine would be magical: I would say words, and it would do the things I wanted. Then, in 1995, I realized it couldn't do that, but I could play video games, so I could just skip the homework altogether.

And now this

I do not think that, at this point, anyone working in tech could have missed the "digital images from natural language descriptions" trend. It has already caused controversies and has been all over the news for a while. In case you did miss it, though, it is the new magic where you describe an image, and a computer makes it real. I know we are all used to speech recognition, self-driving cars, and AI writing programming code, so text to image is "eh." But for the last couple of years, whenever a thing like that appears, I have tried to stop and think about it from the perspective of that five-year-old boy who had just heard about a computer.

I love the Alien movie from 1979. The one with Sigourney Weaver as the non-super-steroid-pumped, non-over-the-top protagonist. Besides the fact that it is fantastic at every level, it is also very old at this point. So in 1979, Ridley Scott and co. imagined that people in a far, far future would be interacting with computers using clunky, text-based, monochrome interfaces in a dedicated room covered with look-alike buttons. That was the daring view of the far future. That was high future tech then.

Just think for a second about where we are now:

- You don't need a map anymore to move around. Your map knows where you are, how long it will take you to get somewhere, and whether that place is open.

- You can translate human speech as if you had a professional interpreter with you who is fluent in all major languages.

- You can point your phone at a plant, insect, or anything. It will tell you what it is and provide years' worth of reading on that subject.

- You can just say words, and lights in a room will go "warm tone and set to 73.5% brightness" because that is how you fancy it now.

- You can instantly talk to someone on the other side of the planet and see them in high definition.

- Etc. There is so much more that can be on that list.

And now you can imagine something, describe it in a phrase, and it will magically appear in front of you in a matter of seconds. You know, I wish people would do this more often. Just stop and think how magical our world is.

How do I use it?

I have tried three options so far:

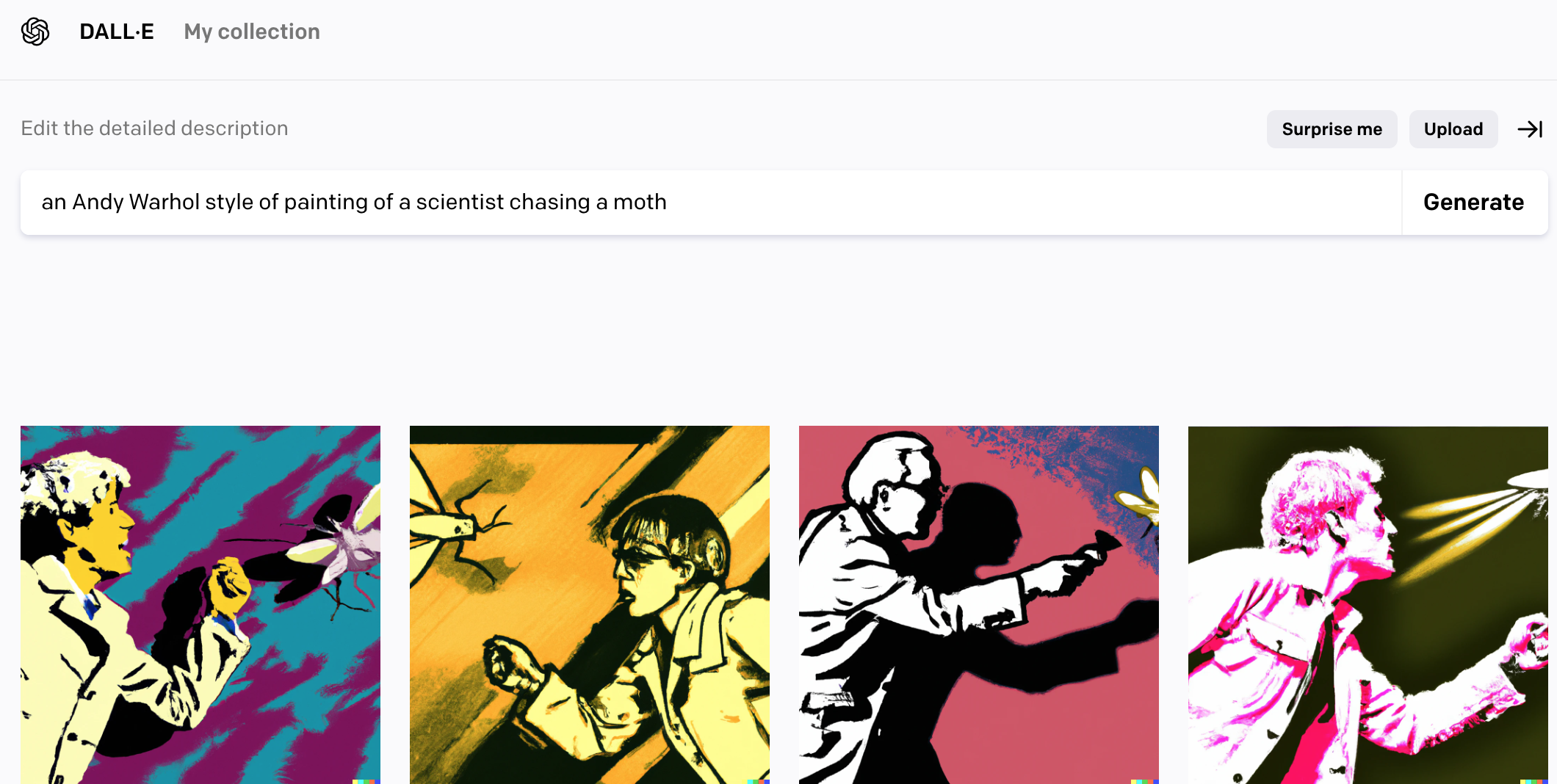

DALL-E 2

DALL-E 2 is in closed beta at the time of writing. I applied for access and got it within a week. This one is the most user-friendly of the three tools I have used (or tried to use). If you are not technical or very motivated, it is your only option for now. The interface is a masterpiece: a text field for the image prompt and a "Generate" button. I love it! I was given 50 or 60 "credits" for free and then 15 "credits" every month. One "credit" buys one press of the "Generate" button.

The service generates four images out of one query. That seems to be the norm, as Midjourney does the same. You can download pictures, request variations of an image, and edit it. Requesting a variation creates more images based on the one you picked.

From the roughly 50 generations I tried, which is definitely not a representative sample, I felt DALL-E is good at capturing the intent. But many images come out "unfinished"; they lack some sort of polish.

I also tried to put just an abstract sentence into it and see what is going to happen, so I put the name of my most recent article, and well, here it is:

I should have expected the result of the last prompt to be gibberish. But the random words are a bit disturbing. It looks like a poster for a Scandinavian heavy metal band concert, especially the last one on the right.

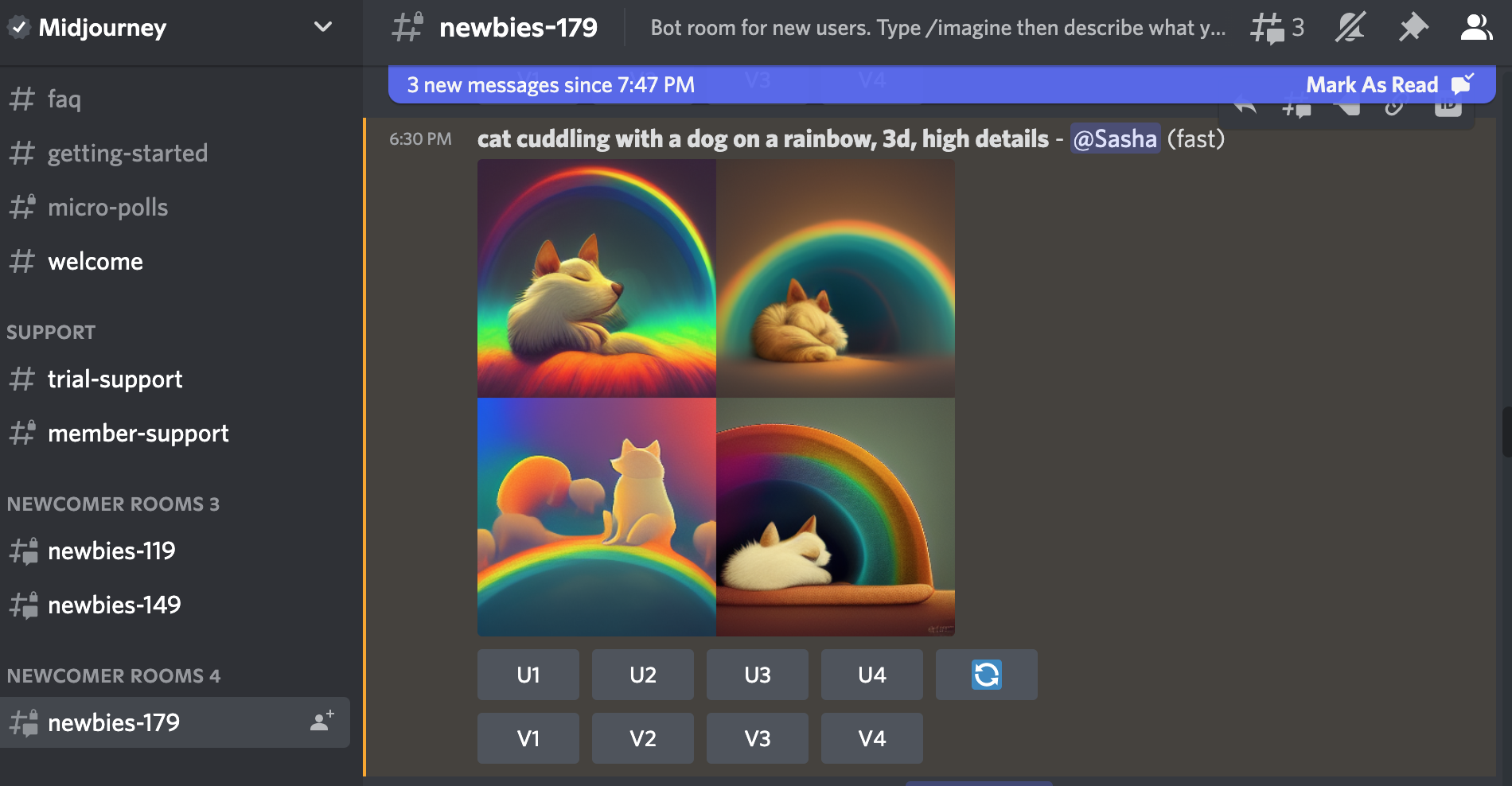

Midjourney

It took me a while to figure things out for this one, maybe because I am not a Discord user and Midjourney relies heavily on it. You authenticate on the website via Discord and see a good-looking but (for me) puzzling UI. It has a "greyed out" section, and I was unsure if that was because of the beta stage of the service or because I had to pay. The latter was the answer.

After paying for the membership, the next stage of confusion started. The service works via queries to a Discord bot. You can't generate images via the website. You send a message in a public chat with the /imagine keyword followed by the prompt text. Image generation takes substantially more time than with DALL-E, but not too long.

After four images are generated, you are given two options per image:

- U1, U2, U3, U4 to upscale an image.

- V1, V2, V3, V4 to create four more image variations.

There is also an option to regenerate the images with new variations. All of that happens directly in Discord, but the results are eventually available on the Midjourney website.

Stable Diffusion

That is pure hacking, at least at this point. You need to clone the git repo and follow the instructions in the README file. The audience for this one is ML scientists and enthusiasts; I infer that from all the assumptions in the Requirements section of the README file. The instructions assume you have cloned the repo and installed a specific Python dependency management system. Then you run two commands, and it all works. For ML scientists and enthusiasts, that is. I am not in that club; the story was different for me.

Installing Conda was no issue; using it was. A required version of a package was missing, so I googled and tried to fix it. It turned out that Mac does not support that version of the package but supports an older one. I did not dare to just downgrade a random library, but further googling told me to do so, with the caveat that:

this patch will <...> run on CPU when GPU is not enabled.

it will take approx an hour to generate results

So I gave up on trying to use Stable Diffusion on my old beat-up Mac. I have a decent gaming PC, but I will have to install Linux on it before I give Stable Diffusion another go.

Overall

The results are a mixed bag. Some images are mind-blowing, spectacular, and exactly what I expected, like that image of a monkey scratching its head. Some are not exactly what I expected, like that "Andy Warhol" image above. Some are just bizarre.

I think it is the same as using a search engine: everyone can do it, but it takes skill and practice to ask the right question. There is already a service called Promptbase that sells prompts for all three.

Here is a high-level comparison of all three:

| | DALL-E 2 | Midjourney | Stable Diffusion |
|---|---|---|---|
| Hosting | Online service | Online service | Self-hosted, command-line based |
| Usability | The easiest to use | Not so straightforward | Requires specific hardware and OS. Doesn't work well, or at all, on Mac |
| Price | 15 free generations, 15 USD for 115 generations | Subscription-based, multiple tiers: 10 USD/month for 200 images, 30 USD/month unlimited (with an asterisk) | Free |
| Generation speed | Under 10 seconds | Around half a minute | Depends on your hardware |
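
To make the paid tiers easier to compare, here is a rough back-of-the-envelope sketch in Python. It uses only the prices from the table above, and it assumes that every DALL-E 2 generation yields four images (as described earlier) and that the Midjourney quota is counted per image. The helper name `cost_per_unit` is made up for this illustration, and the real billing rules may differ.

```python
def cost_per_unit(price_usd: float, units: int) -> float:
    """Return the price of a single unit (generation or image) in US dollars."""
    if units <= 0:
        raise ValueError("units must be positive")
    return price_usd / units


# Figures copied from the comparison table.
dalle_per_generation = cost_per_unit(15, 115)

# Assumption: one DALL-E 2 generation produces four images.
dalle_per_image = dalle_per_generation / 4

# Assumption: the 10 USD Midjourney tier counts its 200-image quota per image.
midjourney_per_image = cost_per_unit(10, 200)

print(f"DALL-E 2: ${dalle_per_generation:.3f} per generation, "
      f"${dalle_per_image:.3f} per image")
print(f"Midjourney (10 USD tier): ${midjourney_per_image:.3f} per image")
```

Under these assumptions, both paid options land at a few cents per image, so for casual use the difference in price matters less than the difference in usability.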

Use and distribution (Copyrights)

Just for reference (I am no legal expert and this is not legal advice, so please check for yourself):

- DALL-E terms of use say:

Use of Images. Subject to your compliance with these terms and our Content Policy, you may use Generations for any legal purpose, including for commercial use. This means you may sell your rights to the Generations you create, incorporate them into works such as books, websites, and presentations, and otherwise commercialize them.

... Subject to the above license, you own all Assets you create with the Services...

Is it useful?

Not all miracles of modern technology are equally helpful. I am not trying to advise or predict the general application of that tech. Especially since this technology is in its infancy. Here is how I plan to use it:

First, it is unbelievably fun. I got my hands on DALL-E 2 and spent an evening using all my credits. I plan to play with the tech more and dive deep into Stable Diffusion, which I have yet to install.

Second, besides fun, I plan to use it to generate images for my articles and presentations. I like visual support for the text, and not just charts and tables: fun images are engaging and help deliver the mood of an article or a presentation. Usually, you would google something you need and, if you don't care about copyrights, just use it (not ok!). Or pay for a service with a bunch of generic images for commercial use. Or, if you are artistic, draw something yourself or take a nice photo. But now you can just say what you want, and chances are you will get something you like.

I also do not see any reason why, in 10 years, most movies or cartoons would not be at least partially generated. Why not? We have synthetic voices already, and we have things like DeepFake, which generates unbelievably realistic videos. I do not see anything fundamental preventing some company from coming up with a standard for a movie script, which will be rendered and voiced automagically by machines. If anyone had told you ten years ago that Amazon would be a major movie studio, you would have laughed, so why not this?

Conclusion

New technology might frighten, might excite, or people might just not care. I am not trying to say that all new tech is good. Good is a relative term; as practice shows, any technology can help and can harm. I am aware of the outrage this particular tech caused in the artistic community. This summer, GitHub Copilot was released, and many people in my field felt threatened. But I can't change the fact that the tech appeared, nor am I willing to get my torch and pitchfork and run after ML scientists to punish them for the heresy. We can't stop progress, and personally, I don't want to. But I believe we need to be careful and responsible in how we use it. Hopefully, our society and government will figure it out sooner rather than later. Or maybe, we can use ML and AI to help the government, eh?
